Recently I have been working more with vSphere Replication and Site Recovery Manager (specifically version 8.6) and would like to share my experience with Traffic Separation for vSphere Replication.

What is Traffic Separation?

Traffic separation means splitting network traffic across different networks, port groups, or VLANs, which can improve both security and performance.

So why separate?

A vSphere Replication Appliance comes with a single (virtual) network adapter by default, and this adapter is used for all types of network communication.

These are:

- Management traffic between the vSphere Replication Management Server and the vSphere Replication Server, and between vCenter and the vSphere Replication Management Server.

- Replication traffic from source ESXi hosts to the target vSphere Replication Server.

- NFC (Network File Copy) replication traffic from the target vSphere Replication Server to the target ESXi datastores.

This VMware KB article also explains the process very well.

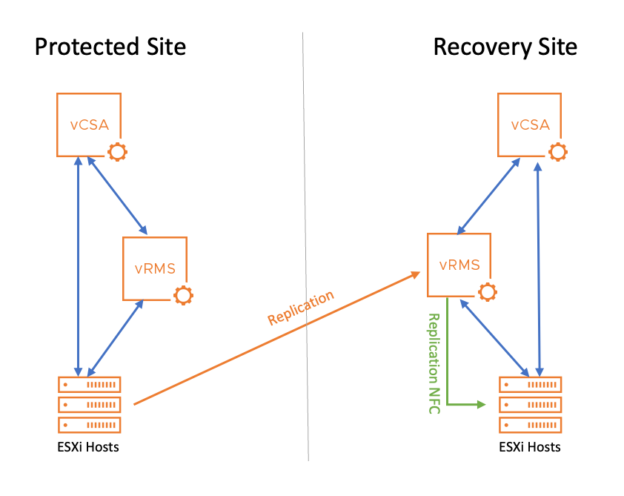

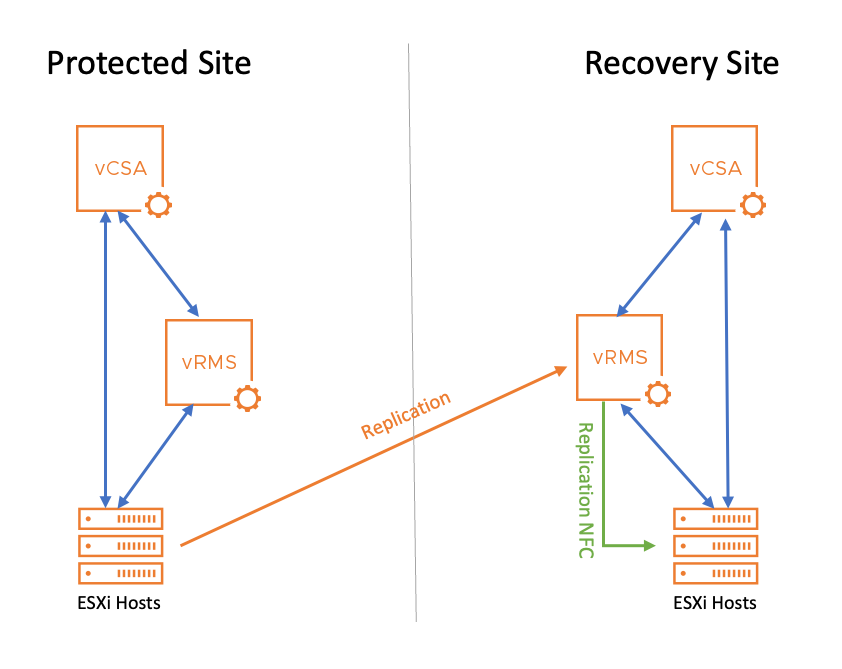

For a better understanding, follow the network traffic in the diagram.

You can see how the data flows from the source ESXi hosts on the left to the target replication appliance (vRMS, orange line) and then from the target vRMS to the target ESXi hosts (green line). The blue lines are the management traffic.

Note: For simplicity, the diagram only shows the path in one direction. For a failback and replication in the opposite direction, it would be the other way around.

As already mentioned, all of these types of traffic use the same virtual network adapter of the appliance in the standard configuration, and therefore the same port group and the same VLAN.

You may well not want that, hence: Traffic separation!

Traffic Separation – here’s how

Prerequisites for the following steps are:

- The vSphere Replication Appliances are already deployed in the source and target vCenter

- Port groups on the virtual switches are ready

I have made it easy for myself with regard to traffic separation, as I only want to separate the management traffic from the entire replication traffic.

Accordingly, I only need one replication port group (here alex-replication) in addition to the management port group (in my case alex-srm).

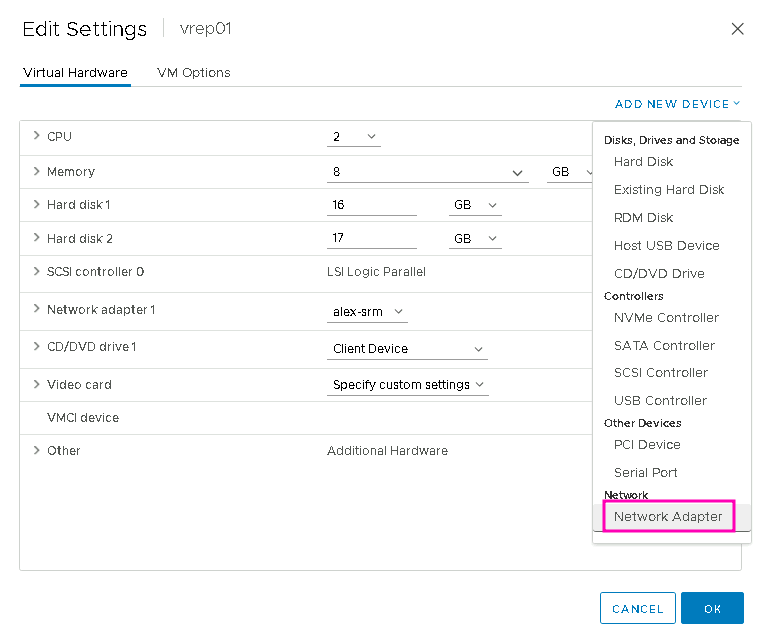

In case you also want to separate the NFC replication traffic, provide another port group and add one more network adapter in the step where the adapter is added to the VM. #easy!

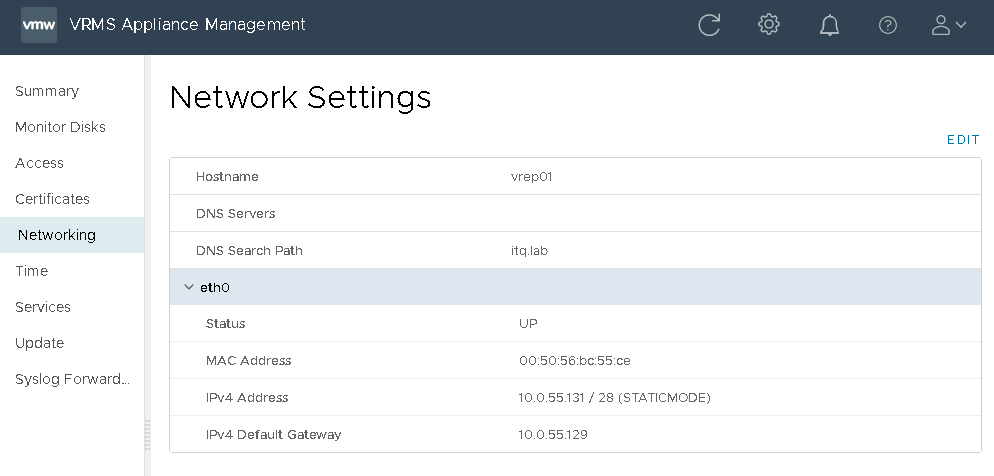

That’s what it looks like when the appliance is installed and before we start with the adjustments:

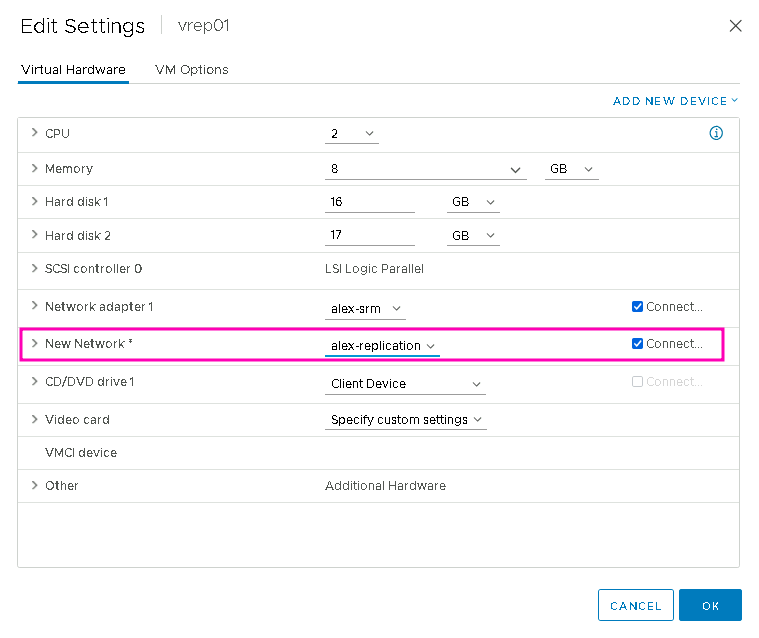

Power off the VM again and add a Network Adapter in the Settings:

Connect the new adapter to the dedicated replication port group:

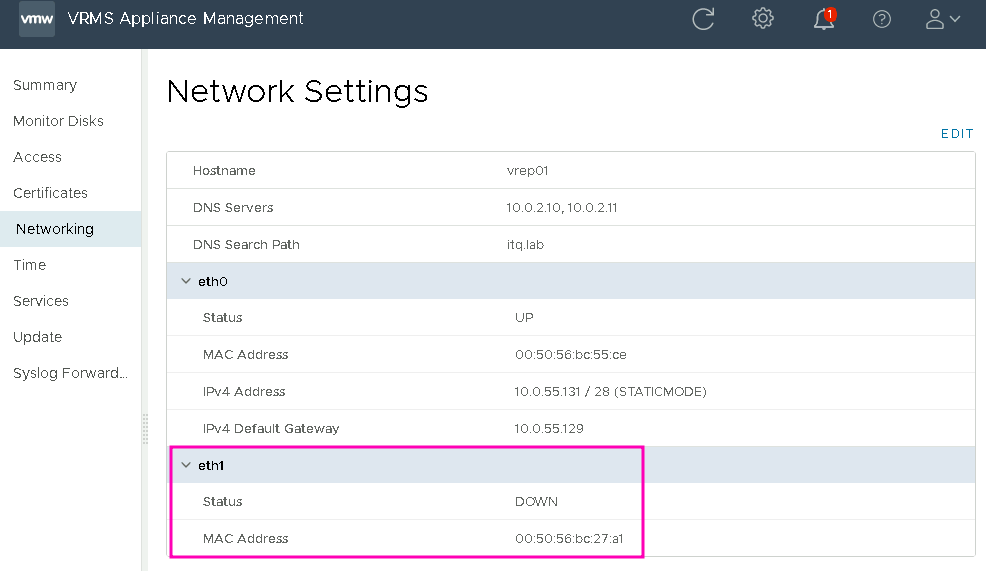

Then power the VM on again. In the VAMI, a new interface “eth1” has now appeared:

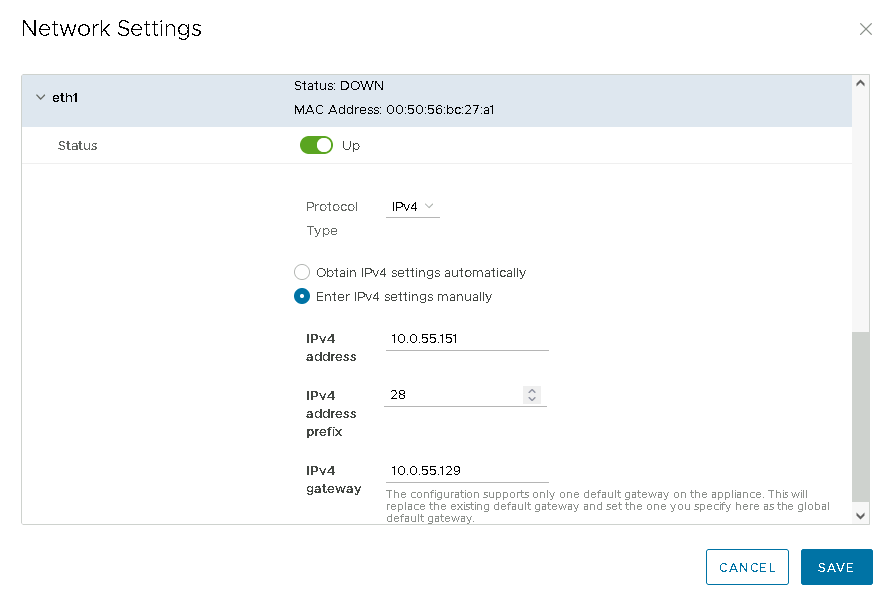

Click “Edit” and then make the settings for “eth1”:

The gateway is required, but beware: the gateway that is set here applies “globally”, i.e. for the whole VM and overwrites the gateway of eth0.

For this reason I have entered the same gateway as for eth0.

If the source and target are located in different networks, static routes must be used anyway; these are set exclusively via SSH/CLI.

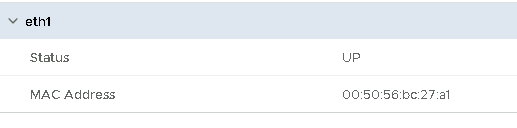

Check again after “Save” and… well, “UP” is good, but what’s going on? Where has my network configuration gone?

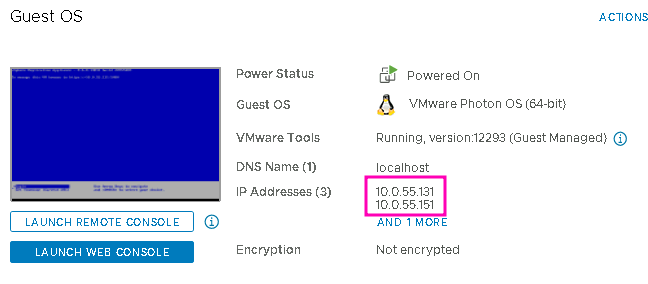

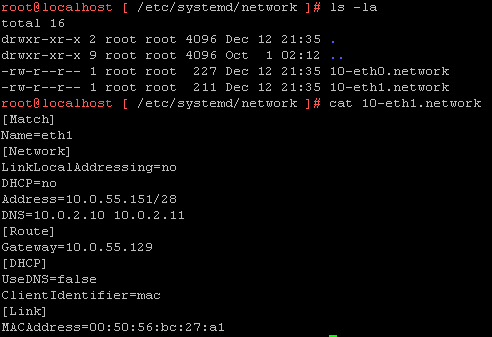

Don’t panic, you can see the new IP in the VM Summary in vCenter…

…or via CLI:

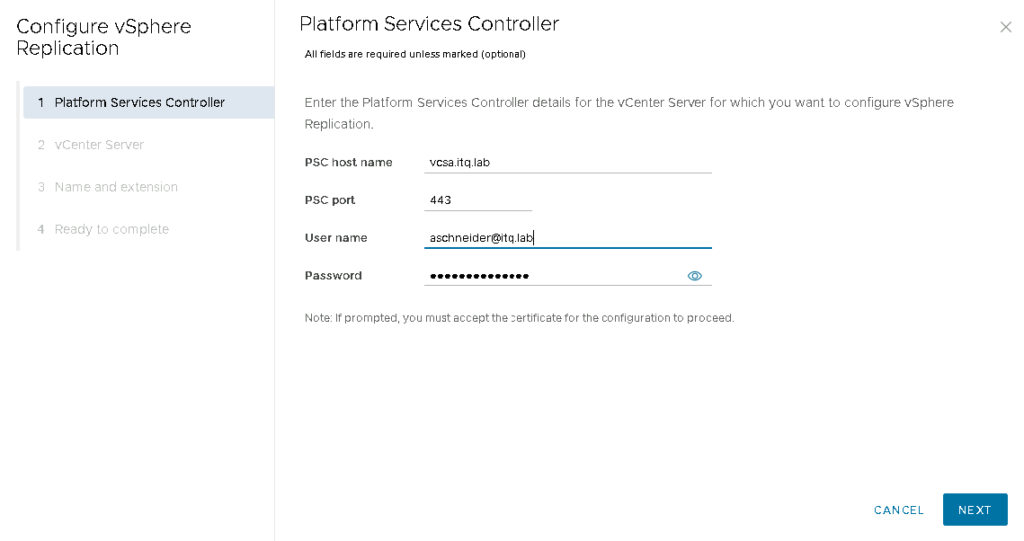

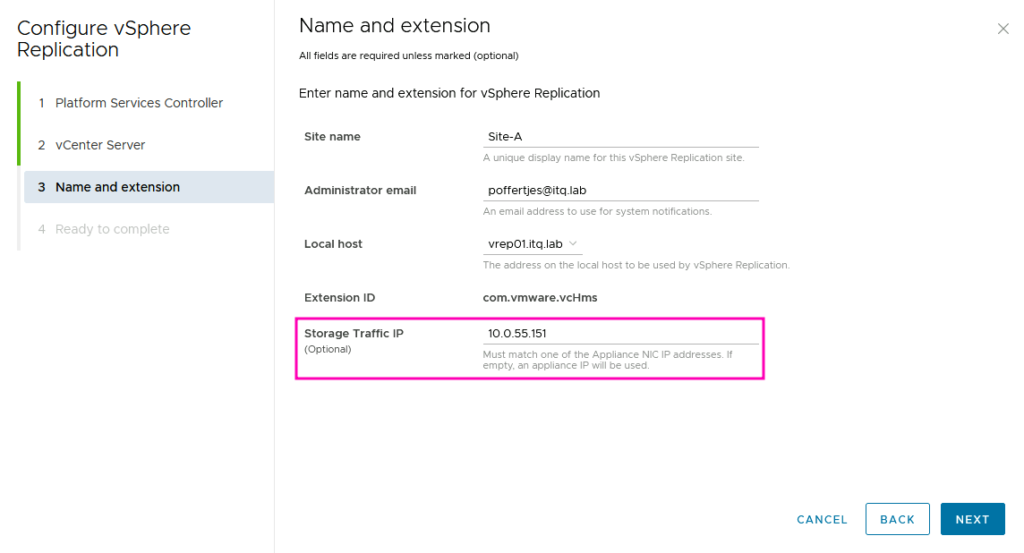

Anyway, don’t let this stop you for the time being. Carry on and register the appliance in the respective vCenters. Set the IP address of eth1 as the “Storage Traffic IP”:

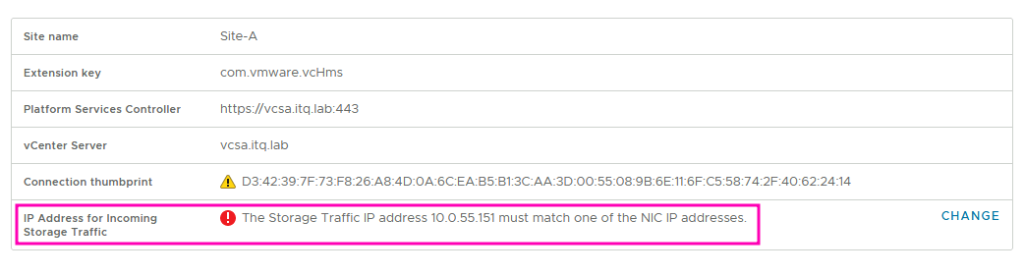

In the Appliance Management interface, the appliance now states in the Summary that the Storage Traffic IP unfortunately does not match any NIC IP. 🤔

Okay?! Again, don’t let it bother you for the time being. Let’s move on!

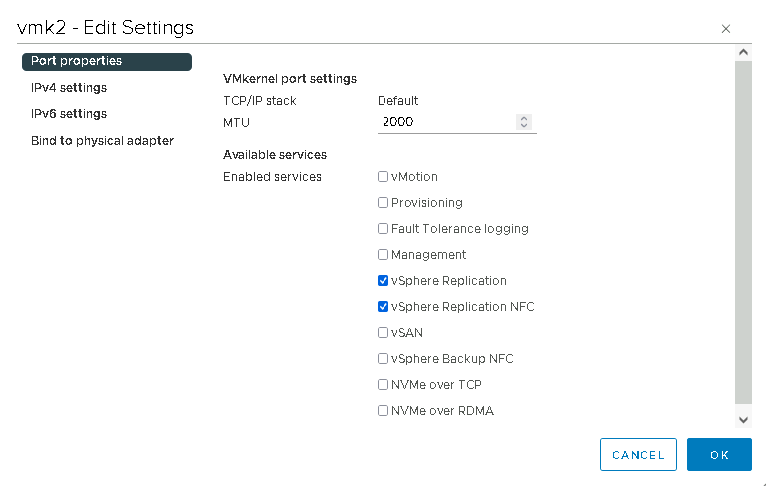
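Since the GUI warning is confusing here, a quick cross-check can help. The following is only a minimal sketch: it assumes you have the JSON output of `ip -j addr show` from the appliance (whether the `-j` flag is available on the appliance's iproute2 build is an assumption on my side) and looks up which interface carries a given IP. The function name and the sample addresses are made up for illustration.

```python
import json
from typing import Optional


def nic_for_ip(ip_json: str, wanted_ip: str) -> Optional[str]:
    """Return the name of the interface that carries wanted_ip, or None."""
    for link in json.loads(ip_json):
        for addr in link.get("addr_info", []):
            if addr.get("local") == wanted_ip:
                return link.get("ifname")
    return None


# Sample shaped like `ip -j addr show` output.
# The addresses are hypothetical documentation addresses, not from the lab.
SAMPLE = json.dumps([
    {"ifname": "eth0",
     "addr_info": [{"family": "inet", "local": "192.0.2.10", "prefixlen": 24}]},
    {"ifname": "eth1",
     "addr_info": [{"family": "inet", "local": "198.51.100.10", "prefixlen": 24}]},
])

# The hypothetical Storage Traffic IP is found on eth1.
storage_nic = nic_for_ip(SAMPLE, "198.51.100.10")
```

If the lookup returns the expected interface, the Storage Traffic IP really is bound to a NIC, whatever the Summary page claims.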

I created a new VMkernel adapter on each of the source and target ESXi hosts and activated the services “vSphere Replication” and “vSphere Replication NFC” (in case anyone has ever wondered what these checkmarks are for, here is the answer 😎)

Why activate both?

Summarised briefly once again:

“Replication” is from source ESXi to target appliance.

“Replication NFC” is from the target appliance to the target ESXi.

If I activate both on the VMkernel, I can then also simply replicate in the opposite direction 👍.
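To make the reasoning explicit, here is a small sketch that models which VMkernel service each site needs per replication direction. It is purely illustrative: the site names and the helper function are my own invention, only the two service names come from the checkboxes above.

```python
from typing import Set, Tuple


def required_services(source: str, target: str) -> Set[Tuple[str, str]]:
    """Return (site, service) pairs needed to replicate from source to target."""
    return {
        # Source ESXi hosts send replication data to the target appliance.
        (source, "vSphere Replication"),
        # Target appliance writes to the target ESXi hosts via NFC.
        (target, "vSphere Replication NFC"),
    }


# Hypothetical site names.
forward = required_services("site-a", "site-b")
reverse = required_services("site-b", "site-a")

# Supporting both directions means every site needs both services.
both_directions = forward | reverse
```

The union of both directions contains both services for both sites, which is exactly why ticking both checkmarks on every VMkernel adapter is the simplest choice.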

In our lab, I had the luxury of being able to use an NSX segment and have all hosts on the same subnet. This saved me from having to use static routes in the appliances.

(In case someone has separate networks, here is the section from VMware’s documentation for static routing.)
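If you are unsure whether your setup needs a static route at all, a rough check is whether the remote replication address falls into the subnet of the local replication interface. The sketch below does only that with Python's standard `ipaddress` module; the function name and all addresses are hypothetical, and it does not configure anything on the appliance.

```python
import ipaddress


def needs_static_route(local_interface: str, remote_ip: str) -> bool:
    """True if remote_ip lies outside the subnet of local_interface.

    local_interface is given in CIDR notation, e.g. "192.0.2.10/24".
    """
    network = ipaddress.ip_interface(local_interface).network
    return ipaddress.ip_address(remote_ip) not in network


# Hypothetical examples using documentation address ranges.
same_subnet = needs_static_route("192.0.2.10/24", "192.0.2.20")
other_subnet = needs_static_route("192.0.2.10/24", "198.51.100.20")
```

In my lab the first case applied (everything on one NSX segment), so no route was needed. In the second case the default gateway of eth0 would be used unless a static route says otherwise.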

Traffic Separation – the Test

After pairing the two replication appliances via vCenter, I created a replication for a VM to check which interface was actually being used.

I used the following command to display the network statistics of the appliance while replication was active:

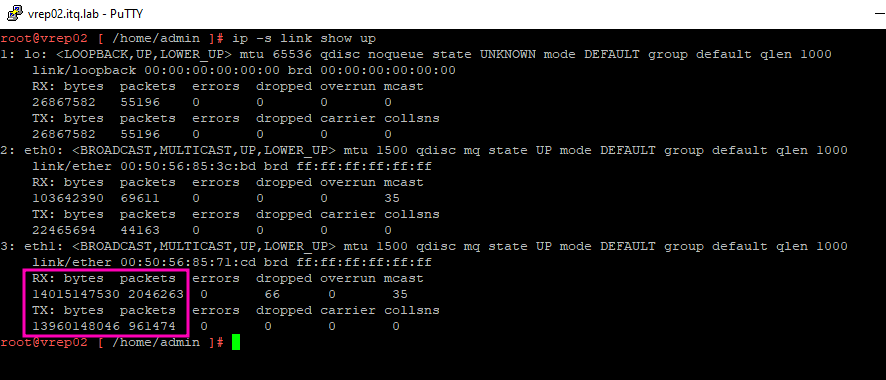

`watch -n1 --differences ip -s link show up`

It was clearly visible that the traffic runs via eth1:

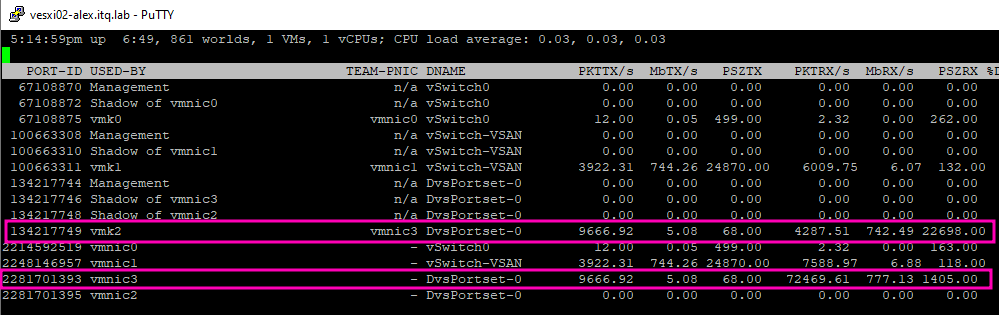
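If you prefer numbers over watching counters flicker, the same idea can be sketched in Python: take two snapshots of the byte counters Linux exposes under `/sys/class/net/<interface>/statistics` and compare the deltas. This is only an illustration under the assumption that Python is available where you run it; the function names and the sample values are made up.

```python
import time
from pathlib import Path
from typing import Dict, Iterable, Tuple

Counters = Dict[str, Tuple[int, int]]  # interface -> (rx_bytes, tx_bytes)


def snapshot(ifaces: Iterable[str]) -> Counters:
    """Read rx/tx byte counters for the given interfaces from sysfs."""
    result = {}
    for name in ifaces:
        stats = Path("/sys/class/net") / name / "statistics"
        result[name] = (int((stats / "rx_bytes").read_text()),
                        int((stats / "tx_bytes").read_text()))
    return result


def busiest(before: Counters, after: Counters) -> str:
    """Return the interface with the largest rx+tx byte increase."""
    deltas = {name: (after[name][0] - before[name][0])
                    + (after[name][1] - before[name][1])
              for name in before}
    return max(deltas, key=deltas.get)


def measure(ifaces: Iterable[str], seconds: float = 5.0) -> str:
    """Take two snapshots `seconds` apart and name the busiest interface."""
    names = list(ifaces)
    first = snapshot(names)
    time.sleep(seconds)
    return busiest(first, snapshot(names))
```

Calling `measure(["eth0", "eth1"])` on the appliance while a replication is running should then name the interface that carried most of the traffic during the interval.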

In addition, I checked the network of the target ESXi host via esxtop. Here, too, one could see that the new VMkernel vmk2 and the associated vmnic3 were busy.

Conclusion

Apart from the poor implementation of the feature in the Appliance GUI and the impression that it is rather a hidden feature (at least currently with vSphere Replication 8.6), I see Traffic Separation as quite appropriate. Different networks for different applications/functions are simply part of a clean design and can enhance security and performance. So why not for replication data as well?

🐵